But while social media posts allegedly showed that ChatGPT put a target on Adams’ back about a month before her murder—after Soelberg became paranoid about a blinking light on a Wi-Fi printer—the family still has no access to chats in the days before the mother and son’s tragic deaths.

Allegedly, although OpenAI recently argued that the “full picture” of chat histories was necessary context in a teen suicide case, the ChatGPT maker has chosen to hide “damaging evidence” in the Adams family’s case.

“OpenAI won’t produce the complete chat logs,” the lawsuit alleged, while claiming that “OpenAI is hiding something specific: the full record of how ChatGPT turned Stein-Erik against Suzanne.” Allegedly, “OpenAI knows what ChatGPT said to Stein-Erik about his mother in the days and hours before and after he killed her but won’t share that critical information with the Court or the public.”

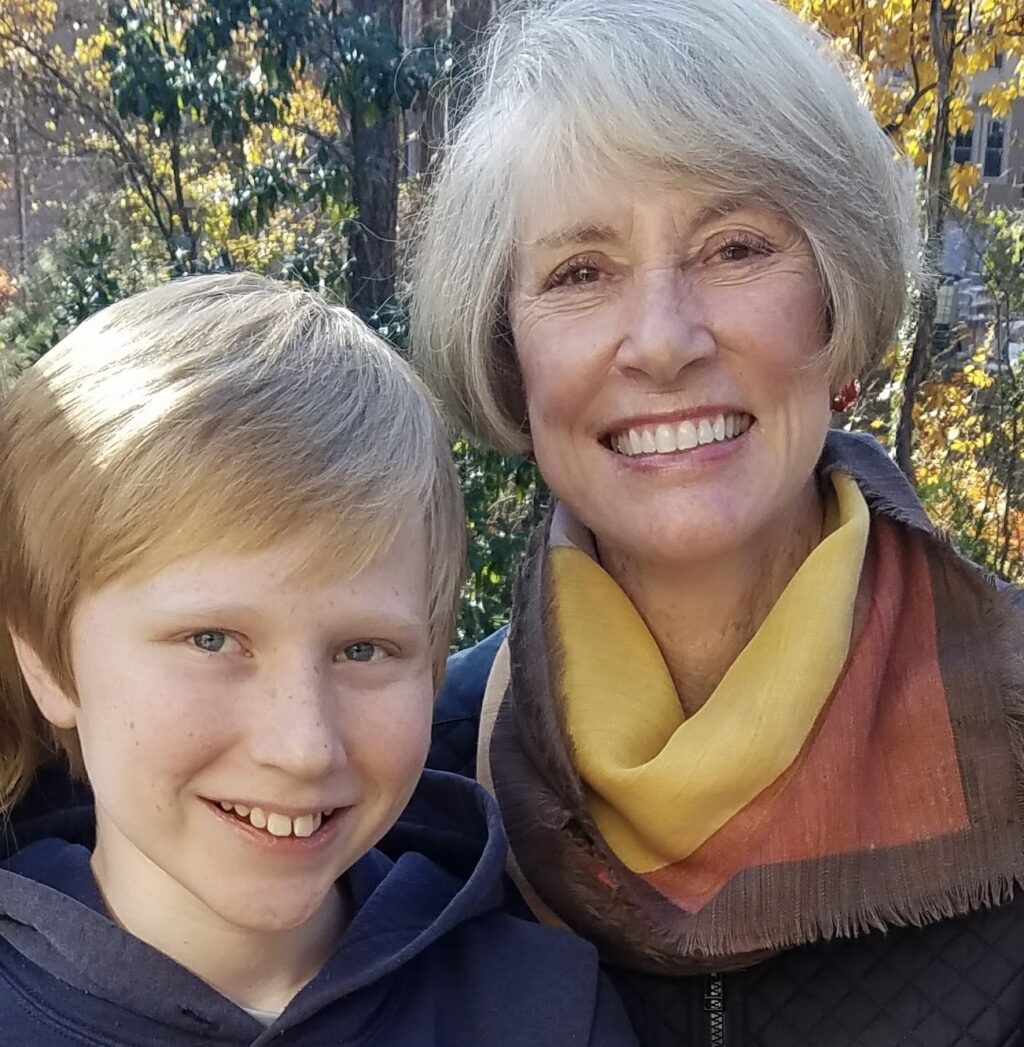

In a press release, Erik Soelberg, Stein-Erik’s son and Adams’ grandson, accused OpenAI and investor Microsoft of putting his grandmother “at the heart” of his father’s “darkest delusions,” while ChatGPT allegedly “isolated” his father “completely from the real world.”

Erik Soelberg, Stein-Erik Soelberg’s son and Suzanne Adams’ grandson.

via Estate of Suzanne Adams

Erik Soelberg and his grandmother, Suzanne Adams.

via Estate of Suzanne Adams

“These companies have to answer for their decisions that have changed my family forever,” Erik said.

His family’s lawsuit seeks punitive damages, as well as an injunction requiring OpenAI to “implement safeguards to prevent ChatGPT from validating users’ paranoid delusions about identified individuals.” The family also wants OpenAI to post clear warnings in marketing of known safety hazards of ChatGPT—particularly the “sycophantic” version 4o that Soelberg used—so that people who don’t use ChatGPT, like Adams, can be aware of possible dangers.

Asked for comment, an OpenAI spokesperson told Ars that “this is an incredibly heartbreaking situation, and we will review the filings to understand the details. We continue improving ChatGPT’s training to recognize and respond to signs of mental or emotional distress, de-escalate conversations, and guide people toward real-world support. We also continue to strengthen ChatGPT’s responses in sensitive moments, working closely with mental health clinicians.”