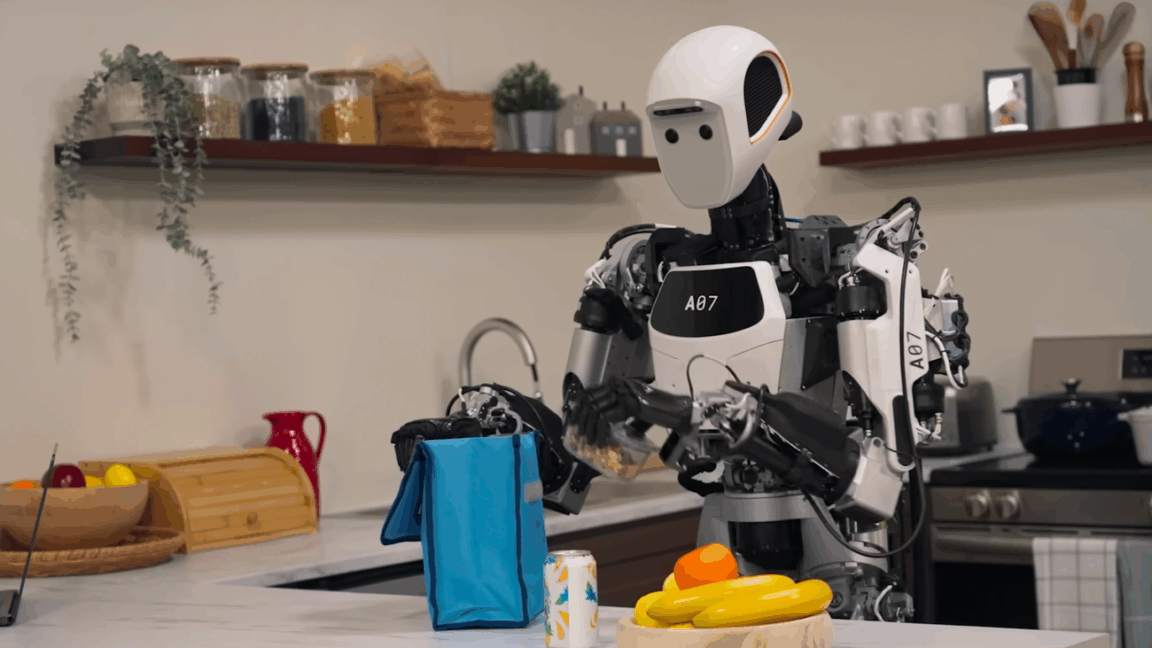

We sometimes call chatbots like Gemini and ChatGPT “robots,” but generative AI is also playing a growing role in real, physical robots. After announcing Gemini Robotics earlier this year, Google DeepMind has now revealed a new on-device VLA (vision-language-action) model to control robots. Unlike the previous release, the new model has no cloud component, allowing robots to operate with full autonomy.

Carolina Parada, head of robotics at Google DeepMind, says this approach to AI robotics could make robots more reliable in challenging situations. This is also the first version of Google’s robotics model that developers can tune for their specific uses.

Robotics is a unique problem for AI because the robot not only exists in the physical world but also changes its environment. Whether you’re having it move blocks around or tie your shoes, it’s hard to predict every eventuality a robot might encounter. The traditional approach of training a robot on each action with reinforcement learning was very slow, but generative AI allows for much greater generalization.

“It’s drawing from Gemini’s multimodal world understanding in order to do a completely new task,” explains Carolina Parada. “What that enables is in that same way Gemini can produce text, write poetry, just summarize an article, you can also write code, and you can also generate images. It also can generate robot actions.”

General robots, no cloud needed

In the previous Gemini Robotics release (which is still the “best” version of Google’s robotics tech), the robots ran a hybrid system with a small model on the robot and a larger one running in the cloud. You’ve probably watched chatbots “think” for several seconds as they generate an output, but robots need to react quickly. If you tell the robot to pick up and move an object, you don’t want it to pause while each step is generated. The local model allows quick adaptation, while the server-based model can help with complex reasoning tasks. Google DeepMind is now unleashing the local model as a standalone VLA, and it’s surprisingly robust.
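
To make the hybrid split concrete, here is a toy Python sketch of a control loop in which a fast local step acts on every tick and picks up guidance from a slower remote model only once it has arrived. It is an illustration under stated assumptions, not Google's implementation: `control_loop`, `toy_local_step`, `toy_remote`, and `no_remote` are hypothetical names, and ticks stand in for real time.

```python
from typing import Callable, Optional


def control_loop(
    ticks: int,
    local_step: Callable[[int, Optional[str]], str],
    remote_hint_at: Callable[[int], Optional[str]],
) -> list[str]:
    """Run `ticks` control steps without ever waiting on the remote model."""
    hint: Optional[str] = None  # latest guidance received from the remote model
    actions = []
    for t in range(ticks):
        new_hint = remote_hint_at(t)  # None means no reply has arrived this tick
        if new_hint is not None:
            hint = new_hint
        # The local step always produces an action, with or without a hint.
        actions.append(local_step(t, hint))
    return actions


# Hypothetical stand-ins for the two models (assumptions for illustration only).
def toy_local_step(t: int, hint: Optional[str]) -> str:
    if hint is None:
        return f"t={t}: act on local estimate"
    return f"t={t}: act on '{hint}'"


def toy_remote(t: int) -> Optional[str]:
    # Pretend the slower remote reply lands at tick 2.
    return "grasp the red block" if t == 2 else None


def no_remote(t: int) -> Optional[str]:
    # On-device case: there is no remote model at all.
    return None


hybrid_actions = control_loop(4, toy_local_step, toy_remote)
on_device_actions = control_loop(4, toy_local_step, no_remote)
```

In the hybrid run, the first two ticks fall back on the local estimate and the remaining ticks use the remote hint. In the on-device run, every tick is handled locally, which mirrors the standalone setup described above.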