Ars Technica

On Thursday, researchers from Google announced a new generative AI model called MusicLM that can create 24 kHz musical audio from text descriptions, such as “a calming violin melody backed by a distorted guitar riff.” It can also transform a hummed melody into a different musical style and generate music that runs for several minutes.

MusicLM uses an AI model trained on what Google calls “a large dataset of unlabeled music,” along with captions from MusicCaps, a new dataset composed of 5,521 music-text pairs. MusicCaps gets its text descriptions from human experts and its matching audio clips from Google’s AudioSet, a collection of over 2 million labeled 10-second sound clips pulled from YouTube videos.

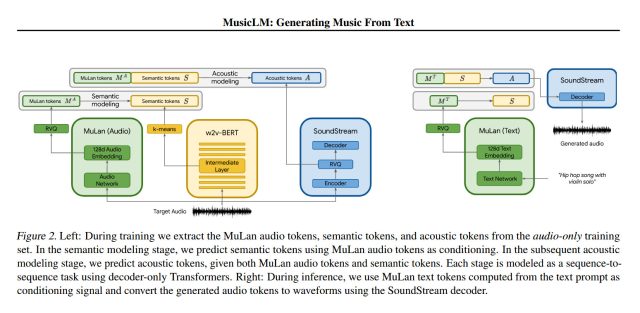

Generally speaking, MusicLM works in two main parts. The first takes a sequence of audio tokens (pieces of sound) and maps them to semantic tokens (representations of meaning) that are linked to captions during training. The second receives user captions and/or input audio and generates acoustic tokens (pieces of sound that make up the resulting song output). The system relies on an earlier AI model called AudioLM (introduced by Google in September 2022), along with other components such as SoundStream and MuLan.
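To picture that two-part flow, here is a toy sketch in Python. It is not MusicLM's code or API: the function names, the vocabulary size, and the hash-based arithmetic are all hypothetical placeholders for what are, in the real system, learned neural models. The only point is the shape of the data, with a caption becoming a short sequence of coarse tokens that is then expanded into a longer sequence of finer, sound-level tokens.

```python
import hashlib
from typing import List

VOCAB_SIZE = 1024  # hypothetical token vocabulary size, chosen for illustration


def toy_semantic_tokens(caption: str, n_tokens: int = 8) -> List[int]:
    """Stand-in for part one: a short sequence of coarse 'meaning' tokens."""
    # A hash replaces the learned model; it only makes the output deterministic.
    digest = hashlib.sha256(caption.encode("utf-8")).digest()
    return [(digest[i] * 4) % VOCAB_SIZE for i in range(n_tokens)]


def toy_acoustic_tokens(semantic: List[int], per_semantic: int = 4) -> List[int]:
    """Stand-in for part two: expand each coarse token into finer 'sound' tokens."""
    return [(s * 31 + k * 17) % VOCAB_SIZE for s in semantic for k in range(per_semantic)]


caption = "a calming violin melody backed by a distorted guitar riff"
semantic = toy_semantic_tokens(caption)
acoustic = toy_acoustic_tokens(semantic)
print(len(semantic), "semantic tokens ->", len(acoustic), "acoustic tokens")
# prints: 8 semantic tokens -> 32 acoustic tokens
```

In a real system, a decoder would then turn the acoustic tokens into an audio waveform; that step is omitted here.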

Google claims that MusicLM outperforms previous AI music generators in audio quality and adherence to text descriptions. On the MusicLM demonstration page, Google provides numerous examples of the AI model in action, creating audio from “rich captions” that describe the feel of the music, and even vocals (which so far are gibberish). Here is an example of a rich caption that they provide:

> Slow tempo, bass-and-drums-led reggae song. Sustained electric guitar. High-pitched bongos with ringing tones. Vocals are relaxed with a laid-back feel, very expressive.

Google also shows off MusicLM’s “long generation” (creating five-minute music clips from a simple prompt), “story mode” (which takes a sequence of text prompts and turns it into a morphing series of musical tunes), “text and melody conditioning” (which takes a human humming or whistling audio input and changes it to match the style laid out in a prompt), and generating music that matches the mood of image captions.

Google Research

Further down the example page, Google dives into MusicLM’s ability to re-create particular instruments (e.g., flute, cello, guitar), different musical genres, various musician experience levels, places (escaping prison, gym), time periods (a club in the 1950s), and more.

AI-generated music isn’t a new idea by any stretch, but AI music generation methods of previous decades often created musical notation that was later played by hand or through a synthesizer, whereas MusicLM generates the audio waveform itself. Also, in December 2022, we covered Riffusion, a hobby AI project that can similarly create music from text descriptions, but not at high fidelity. Google references Riffusion in its MusicLM academic paper, saying that MusicLM surpasses it in quality.
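The difference between notation and raw audio is easy to see in a small Python sketch of the older, notation-first approach. Everything here is illustrative rather than taken from any system mentioned above: the three-note melody, the helper names, and the bare sine-wave "synthesizer" are assumptions. The sample rate is set to 24 kHz only to match the figure cited for MusicLM.

```python
import math
from typing import List, Tuple

SAMPLE_RATE_HZ = 24_000  # matches the 24 kHz output rate cited for MusicLM


def midi_to_hz(note: int) -> float:
    """Equal-temperament pitch: MIDI note 69 is A4 = 440 Hz."""
    return 440.0 * 2.0 ** ((note - 69) / 12)


def render(notes: List[Tuple[int, float]]) -> List[float]:
    """Turn (MIDI note, duration in seconds) pairs into raw samples in [-1, 1]."""
    samples: List[float] = []
    for note, seconds in notes:
        freq = midi_to_hz(note)
        n = round(seconds * SAMPLE_RATE_HZ)
        # One sine-wave sample per tick of the sample clock.
        samples.extend(math.sin(2 * math.pi * freq * i / SAMPLE_RATE_HZ) for i in range(n))
    return samples


melody = [(60, 0.5), (64, 0.5), (67, 0.5)]  # C4, E4, G4 as symbolic notation
audio = render(melody)
print(len(melody), "symbolic events ->", len(audio), "raw samples")
# prints: 3 symbolic events -> 36000 raw samples
```

Three symbolic events become 36,000 numbers for just a second and a half of sound. A model that outputs audio directly has to produce data at that scale, which is part of what makes it harder than emitting a score.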

In the MusicLM paper, its creators outline potential impacts of MusicLM, including “potential misappropriation of creative content” (i.e., copyright issues), potential biases affecting cultures underrepresented in the training data, and potential cultural appropriation issues. As a result, Google emphasizes the need for more work on tackling these risks and is holding back its models: “We have no plans to release models at this point.”

Google’s researchers are already looking ahead toward future improvements: “Future work may focus on lyrics generation, along with improvement of text conditioning and vocal quality. Another aspect is the modeling of high-level song structure like introduction, verse, and chorus. Modeling the music at a higher sample rate is an additional goal.”

It’s probably not too much of a stretch to suggest that AI researchers will continue improving music generation technology until anyone can create studio-quality music in any style just by describing it, although no one can yet predict when that goal will be attained or exactly how it will affect the music industry. Stay tuned for further developments.