Hiding in the light

Captured video in a conference room with two coded light sources.

Peter Michael et al., 2025

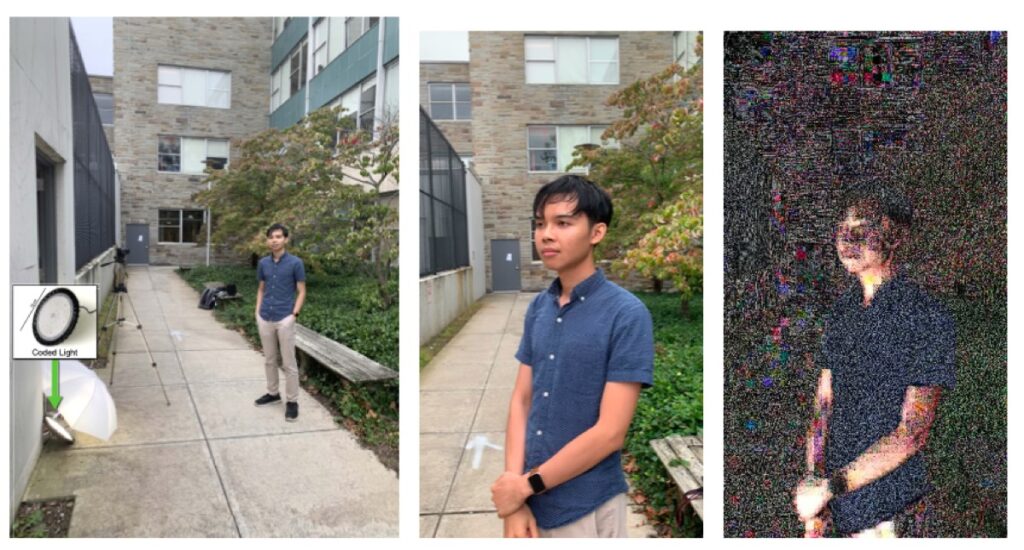

Setup for outdoor capture

Peter Michael et al., 2025

Previously, the Cornell team had figured out how to make small changes to specific pixels to tell if a video had been manipulated or created by AI. But that approach worked only if the creator of the video used a specific camera or AI model. Their new method, “noise-coded illumination” (NCI), addresses that and other shortcomings by hiding watermarks in the apparent noise of light sources. A small piece of software can do this for computer screens and certain types of room lighting, while off-the-shelf lamps can be coded via a small attached computer chip.

“Each watermark carries a low-fidelity time-stamped version of the unmanipulated video under slightly different lighting. We call these code videos,” Davis said. “When someone manipulates a video, the manipulated parts start to contradict what we see in these code videos, which lets us see where changes were made. And if someone tries to generate fake video with AI, the resulting code videos just look like random variations.” Because the watermark is designed to look like noise, it’s difficult to detect without knowing the secret code.
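To make the idea concrete, here is a toy sketch in Python of how a noise-like code in the lighting could be recovered and used to spot an edit. It is not the Cornell team's implementation. It assumes a single coded light that supplies all of the illumination, a row of ten pixels instead of full frames, and a simple ±1 pseudorandom sequence as the secret code. Every name in it (`make_code`, `render`, `tamper`, `recover_code_image`, `flag_tampered`, `AMPLITUDE`) is hypothetical.

```python
import random

AMPLITUDE = 0.02  # assumed flicker strength relative to the steady light


def make_code(n_frames, seed):
    """Pseudorandom +/-1 sequence standing in for the secret code."""
    rng = random.Random(seed)
    return [rng.choice((-1.0, 1.0)) for _ in range(n_frames)]


def render(reflectance, code, noise=0.01, seed=0):
    """Simulate frames: each pixel reflects steady light plus a tiny coded flicker."""
    rng = random.Random(seed)
    return [
        [r * (1.0 + AMPLITUDE * c) + rng.gauss(0.0, noise) for r in reflectance]
        for c in code
    ]


def tamper(frames, start, stop, value=0.8, noise=0.01, seed=1):
    """Overwrite pixels [start, stop) in every frame with content that lacks the code."""
    rng = random.Random(seed)
    return [
        frame[:start]
        + [value + rng.gauss(0.0, noise) for _ in range(stop - start)]
        + frame[stop:]
        for frame in frames
    ]


def recover_code_image(frames, code):
    """Correlate each pixel's time series with the code to estimate its coded response."""
    n = len(frames)
    image = []
    for p in range(len(frames[0])):
        series = [frame[p] for frame in frames]
        mean = sum(series) / n
        image.append(sum(c * (v - mean) for c, v in zip(code, series)) / n)
    return image


def flag_tampered(frames, code, threshold=0.5):
    """Flag pixels whose coded response is far weaker than their brightness implies."""
    n = len(frames)
    flagged = []
    for p, response in enumerate(recover_code_image(frames, code)):
        brightness = sum(frame[p] for frame in frames) / n
        if response < threshold * AMPLITUDE * brightness:
            flagged.append(p)
    return flagged


if __name__ == "__main__":
    code = make_code(2000, seed=42)
    scene = [0.5 + 0.05 * i for i in range(10)]
    real = render(scene, code)
    fake = tamper(real, 4, 8)
    print("authentic:", flag_tampered(real, code))
    print("edited:   ", flag_tampered(fake, code))
```

In this toy setup the flicker is smaller than the per-frame sensor noise, so it only becomes visible when many frames are correlated against the right code. Running `flag_tampered` with a code generated from a different seed flags every pixel, which mirrors the point that the watermark looks like noise to anyone who does not hold the code.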

The Cornell team tested their method against a broad range of manipulations, including warp cuts, changes in speed and acceleration, compositing, and deep fakes. Their technique proved robust to things like signal levels below human perception; subject and camera motion; camera flash; human subjects with different skin tones; different levels of video compression; and indoor and outdoor settings.

“Even if an adversary knows the technique is being used and somehow figures out the codes, their job is still a lot harder,” Davis said. “Instead of faking the light for just one video, they have to fake each code video separately, and all those fakes have to agree with each other.” That said, Davis added, “This is an important ongoing problem. It’s not going to go away, and in fact it’s only going to get harder.”

ACM Transactions on Graphics, 2025. DOI: 10.1145/3742892.